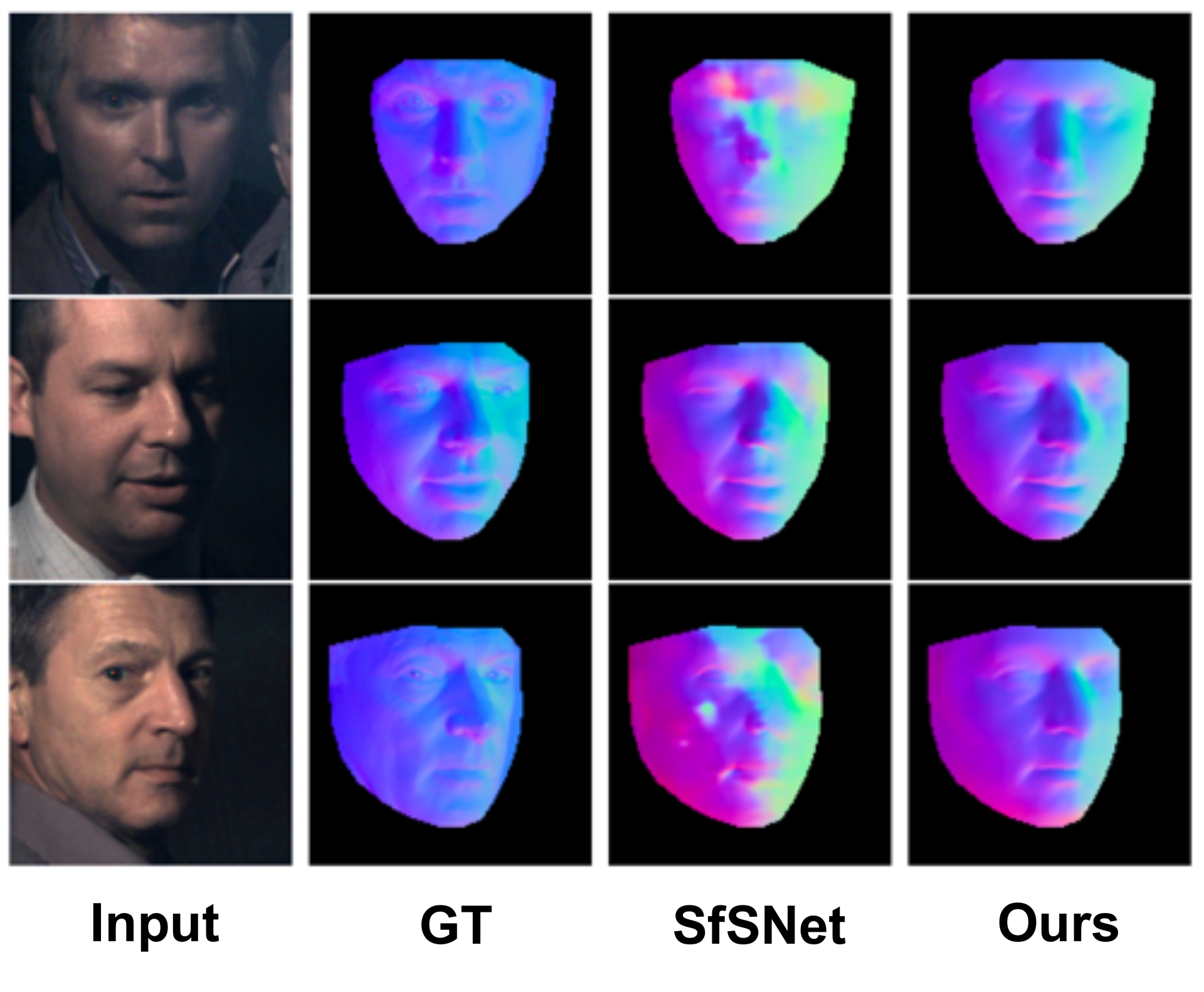

@InProceedings{PNVR_2020_CVPR,
  author    = {PNVR, Koutilya and Zhou, Hao and Jacobs, David},
  title     = {SharinGAN: Combining Synthetic and Real Data for Unsupervised Geometry Estimation},
  booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month     = {June},
  year      = {2020}
}